An industry seemingly incapable of having a quiet day off, SEO has been turned on its head (again).

And this time, it comes in the form of leaked documentation for Google Search’s Content Warehouse API. This documentation – consisting of 2,500 pages and detailing over 14,000 attributes – provides direct information about the information that Google collects, stores and potentially uses within its algorithm.

A leak of this scale is pretty unprecedented, and gives us a view of the underlying mechanisms of Google rankings at much greater depth than we’ve seen before.

But why should digital PRs give a shit about this leak?

Any information about Google’s ranking algorithm is invariably linked to digital PR, and especially the importance of links as a ranking factor.

And whilst the end goal of digital PR reaches far beyond link numbers alone, and extends to improving sites’ organic visibility and rankings, there’s no getting away from the fact that links matter.

This leaked documentation provides more tangible proof than we’ve ever had before of what elements of digital PR provide the biggest impact on sites’ visibility and how, most importantly, Google could value certain types of links differently to others.

But for any digital PRs who don’t fancy reading through 2,500 pages of documentation, we’ve summarised some key takeaways below.

James Brockbank, Digitaloft’s Founder & Managing Director and myself, Digitaloft’s SEO Lead, have spent the past few days digging deep into the leaked documents to understand what they tell us that’s relevant to digital PR and links, in particular.

These findings aren’t comprehensive. There’s a lot to look through and there’s probably more we’ll find in the coming days. But it’s a good start, and safe to say there’s a lot of valuable takeaways.

Google’s leaked documentation: a summary

Google’s API documentation appears to have been leaked from GitHub, with documents being made available to the public for a short period of time.

It’s important to note what this documentation is and what it isn’t. It’s home to the features that Google collects, stores and manipulates around content, links and user behaviour. These features are not (necessarily) ranking factors. There is no information within this documentation that suggests how Google uses each attribute, and to what extent.

Caveats aside, there is a wealth of information to be gleaned from this leaked documentation. Great analyses from Mike King and Rand Fishkin have led the way in summarising the key takeaways, which include:

- Information on what data Google stores, which goes directly against past statements from Google representatives;

- A much more in-depth description of NavBoost and the ways in which Google uses click signals in its ranking algorithms;

- Evidence of a “sandbox” for freshly-created sites, based on the attribute “hostAge”;

- Existence of whitelisted sites for domains providing legitimate information around politics and Covid, based on the attributes “isElectionLocalAuthority” and “isCovidLocalAuthority”;

- A series of algorithmic demotions, including Anchor Mismatch, Nav Demotion, and Exact Match Domains Demotion.

…and this is only just scratching the surface.

But what does all this mean for digital PR?

What digital PRs can learn from the leaked documents

These leaked documents tell us A LOT about what Google’s ranking systems likely are, and aren’t, using as signals and ranking factors.

But one area of SEO that we’ve gained a massive insight into is links. And whilst a lot of the information contained within these documents isn’t new news, it gives confirmation on a lot of things the industry has suspected or assumed.

We’ve been through the documents and analysed these through the lens of link building and digital PR.

There’s an awful lot in there that digital PRs, especially, can take away from this leak, both in terms of how Google (still) uses links as an important ranking factor as well as things that give us an indication into how important both brand and author signals are, as well as the hottest topic in the industry right now, relevancy. We’ve been working our way through references to these things in the 14,000 ranking features in the documents to share everything you need to know from these as a digital PR and the key things to take away.

Whilst, as SEOs, we talk about links (backlinks, internal links and outbound links), Google’s documentation refers to these as anchors.

Local anchors = internal links

Non-local anchors = backlinks

We’ll be focusing mostly on non-local anchors in this post.

There’s a lot for us to look at in the documentation in relation to anchors, but here, we’ve taken a look at some of the things that stood out to us the most, and those that we believe to be the most important ones to know about.

1. Google could be ignoring links from within content that’s not relevant

Relevancy in digital PR is maybe one of the hottest topics in the industry right now, and its definition continues to divide marketers.

And whilst here isn’t the place to debate what is and isn’t relevant (I’ll let you decide on that one for now), the leaked documents give us a very clear view that Google could be ignoring links from within content that isn’t deemed to be relevant.

Let’s pay attention to the ‘anchorMismatchDemotion’ attribute referenced in the ‘CompressedQualitySignals’ module.

Whilst we don’t have any other specifics about what this demotion is, it’s clear that it’s intended to ‘demote’ (ignore) anchors (links) that are a mismatch between the source and the target.

The only thing we don’t really know is what this is a mismatch of, but we can pretty safely assume that this is relevancy.

For starters, there’s few other things that could be seen as a match or mismatch between a link’s source and target, but at the page-level we also see an attribute of ‘topicEmbeddingsVersionedData’ within this same module.

What are topic embeddings?

They’re a type of representation used in natural language processing (NLP) to capture the semantic meaning of topics within documents, in this instance webpages. They transform topics into continuous vector spaces, where semantically similar topics are located closer together.

We don’t need to understand these in depth to see that this is, in effect, the topics of a webpage.

We also see, in the ‘PerDocData’ module the ‘webrefEntities’ attribute.

This one’s a little easier to understand. It’s the entities associated with the page.

It makes total sense, therefore, that the mismatch demotion happens when the link source and target pages aren’t topically aligned.

How aligned? We don’t know. But usually, common sense is all it takes to determine whether or not a link is relevant. Further analysis can be carried out using NLP to take a closer look at the topics a page is relevant to.

The learning here is pretty simple. Relevant links matter a lot. If you’re earning links from within content that isn’t topically relevant, there’s a chance these links are being ignored and, therefore, having no impact on your rankings.

My guess here is that this attribute, in practice, would not be as simple as it appears here. I’m doubtful that the ‘anchor mismatch’ would be as straightforward as being ‘page-level’ to ‘page-level,’ given that it’s not unnatural for pages that aren’t always the closest-match from a topicality perspective to get linked to.

I’m considering this in the context of relevancy at a level that sits higher than the page-level. Without further context, I’ll assume the anchor mismatch is between the source page and the target domain.

What we do know, though, is that Google can define how focused a site is on one topic.

Let’s look at the ‘QualityAuthorityTopicEmbeddingsVersionedItem’ module.

Here, we see this defined as ‘Message storing a versioned TopicEmbeddings scores. This is copied from TopicEmbeddings in docjoins.’

Within this, we see an attribute of:

- pageEmbedding

- siteEmbedding

- siteFocusScore

- siteRadius

Could this be indicating that Google is measuring how closely a site is targeted to a single topic? As well as the fact that this can be considered at the site and page levels?

I’m particularly interested in the last two that suggest a measure of the deviation between page-level and site-level topicality.

My thoughts on the anchorMismatchDemotion being based around this sort of radius measure start to make more sense.

Could link relevancy be considered at the ‘site_embedding’ level? It’s definitely possible and suggests you should focus on earning links relevant to your site’s topics as a whole, rather than getting too bogged down with page to page relevancy. Instead, think page to domain.

2. Links from sites in the same country as yours are important

Within the ‘AnchorsAnchorSource’ module is an attribute called ‘localCountryCodes.’

If Google is storing the countries to which the source page is the most relevant, this could suggest that those that match the countries to which the target page (the page or site being linked to) is most relevant are weighted stronger than links from sources where the country is not the same.

If this is correct, a link from DailyMail.co.uk (a site most relevant to the UK) would be weighted stronger for a website of a business in the UK than a link from an .fr (French) publication, for example.

Whilst this isn’t necessarily anything groundbreaking in terms of understanding the type of links most likely to positively influence search rankings, it’s definitely confirmation that this should be a consideration.

Whilst I don’t believe a site would see links demoted (ignored) when the most relevant countries don’t match, it makes sense that those where there is a match would be weighted stronger.

The takeaway? You want relevant links that primarily come from your country. If you operate in multiple territories, then there would be multiple countries that are relevant, depending on how the site is set up. For example, if you’re using country-specific subdirectories, defined with hreflang, then we could assume that the country-specific relevancy would exist at this level.

3. Google tags links coming from high-quality news sites

In the ‘AnchorsAnchor’ module, we see reference to an attribute called ‘encodedNewsAnchorData.’

We don’t know what this specifically means, but we do see the definition as referencing links coming from ‘newsy, high-quality sites.’

It’s easy, as PRs, for us to assume that this means the same as what we consider to be a news site. It might not. We don’t have actual confirmation of this. But we do have a clear reference to high-quality sites.

If Google can tag links that come from these high-quality news sources, it means they could be using them within the weighting of ranking systems.

But let’s not get ahead of ourselves. This attribute is about encoding information about the newsiness of a link. This could mean a number of things.

What’s interesting though is the reference to this only being populated if the linking site is a newsy, high-quality one, showing they’re able to make this differentiation.

However this is used and whatever information gets embedded, this definitely goes a long way to helping to justify digital PR as a link acquisition tactic, given that Google can specifically tag links from news sites.

4. The content surrounding a link helps to give context

While anchor text is one signal as to the context of a link, so is the surrounding content on the source page.

In the ‘AnchorsAnchor’ module, we see a number of attributes that relate to the content that is in close proximity to the link.

- context2

- fullLeftContext

- fullRightContext

We only see a definition for context2, but this gives us enough insight into what these attributes are:

The anchor text of a link isn’t the only way to understand the context of a link. And given that this was historically manipulated, it makes sense that the content surrounding a link is used to understand the context of a link.

This becomes even more important to understand when so many links, quite rightly, use branded or generic anchor text.

What does this mean? Again, it comes back to relevancy. Earning links from within relevant content will result in content that surrounds the link giving context. This should be a given with digital PR, but it helps to justify why this is such a powerful tactic compared to others.

It could also (and I stress, could; I’m thinking aloud here, not basing it off evidence) mean that a link that uses branded anchor text, but that has surrounding text that defines who the brand is (e.g. ‘online bank, Starling’) could be weighted stronger than a branded link that isn’t surrounded by the same level of context.

We don’t know. And probably never will for sure. But it would be an interesting test to try to isolate the impact that the text surrounding a link has on its impact.

5. Links from seed sites, or those linked to from them, likely carry more weight

Last year, James wrote about how seed sites and link distance ranking and how it’s an important concept for digital PRs to understand.

And we see evidence within the documentation of this.

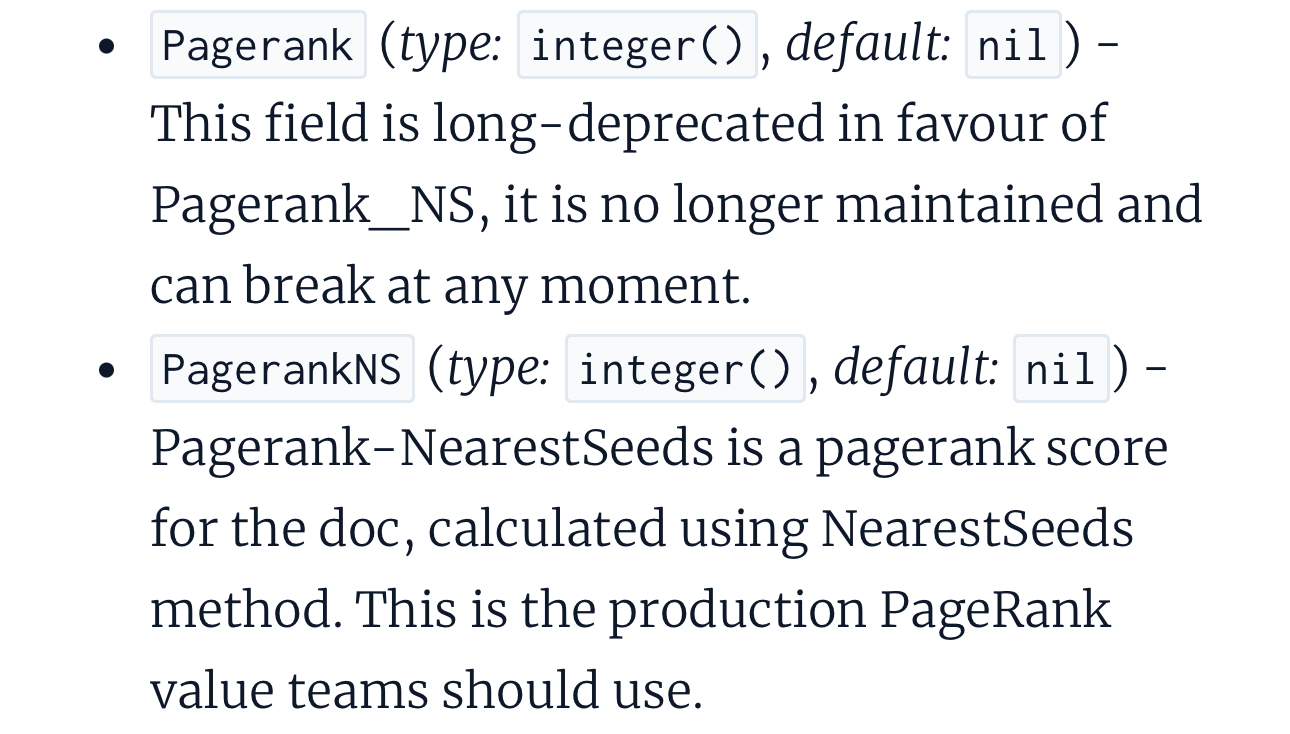

We see a clear reference to PagerankNs (PageRank-NearestSeeds) being the production PageRank value to be used, rather than PageRank, which is referenced as “long-deprecated”.

If you’re not familiar with the concept, have a look at the guide I linked above to explore it in a lot more detail. But in short, this is based around Google using a set of ‘trusted seed sites’ such as The New York Times. The ideal is that the closer a site is (fewer links away from) one of these trusted seed sites, the more valuable a link is.

We don’t know what sites are in the trusted seed set, Google has only ever disclosed The New York Times and the now-defunct Google Directory, but these are likely a topic’s most authoritative publications.

Again, this suggests why links earned with digital PR are the most valuable links you can get, with this typically being based around tactics which see you linked to from trusted, authoritative sites.

6. SiteAuthority could be Google’s version of Domain Authority and Domain Rating

Ah, domain authority (or domain rating, authority score, or whatever your metric of choice is).

For years, it’s been one of the most talked about and controversial topics in digital PR.

To recap, the argument has long been that domain authority was an arbitrary metric invented by Moz to provide another way to contextualise linkbuilding success (or, if you’re a skeptic, to sell their tool). Other tools, like Semrush and Ahrefs, have their own versions that show largely the same thing – the growth in authority or strength of a given domain, largely based on links.

There have been a few arguments against the over-reliance on domain authority as a metric over the years, but the main one has always come down to this: Google doesn’t measure domain authority.

Or do they?

Google representatives have outright said in the past that they “don’t have anything like a website authority score” (you can see those exact words coming from John Mueller’s mouth in this video from 2017).

But lo and behold, Google’s leaked documentation tells a different story.

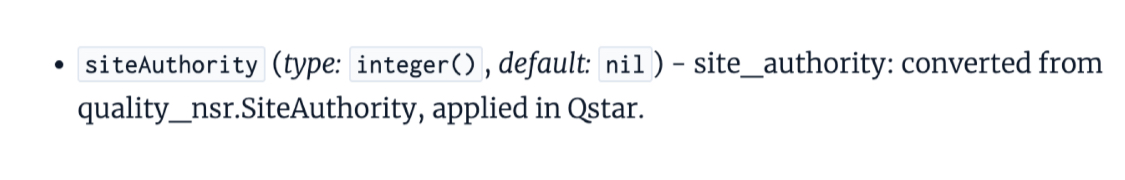

The CompressedQualitySignals module clearly shows a feature labelled siteAuthority, which we can only presume is an equivalent version of domain authority/rating (although it may not be solely a link-based score, since this is what PageRank is). We, of course, don’t know anything about how this is computed, or the extent to which (if at all) it is used in Google’s ranking systems.

The closest clue we get is a feature called authorityPromotion, which clearly insinuates the existence of siteAuthority as a QualityBoost promotion.

So what does this mean for Digital PR?

It means that, to some extent, authority matters. Google has a measure for it, and it is housed within the Quality Signals documentation. We’ll come back to this throughout some more of our key takeaways, but it’s further confirmation (in case we needed any more) that the quality of links is far more important than the quantity.

This, of course, then ties in with our earlier point that Google has a specific demarcation for newsy sites. We can assume here that links from these “high-quality newsy sites”, as defined by Google, have some ability to boost site authority.

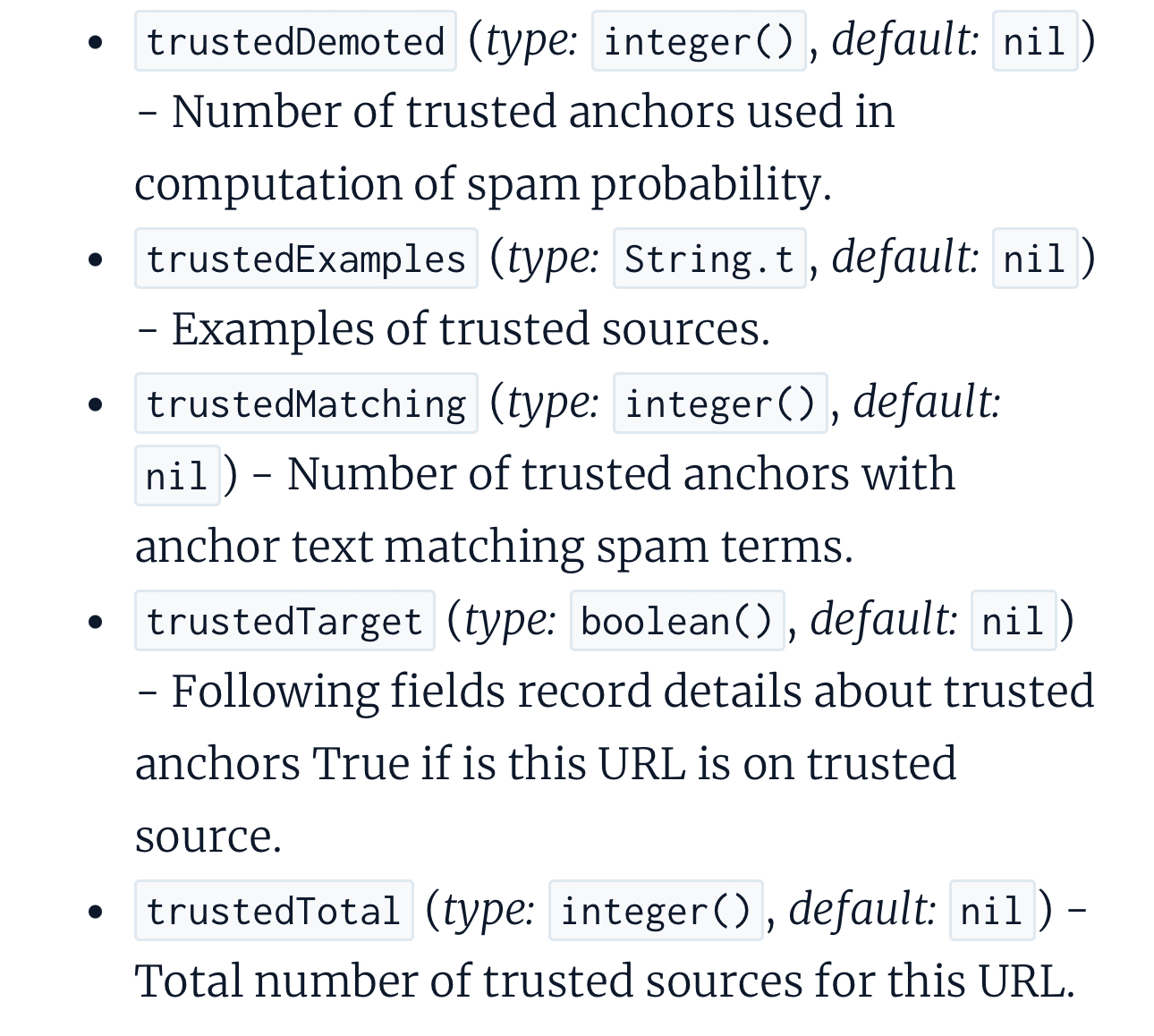

7. ’Trusted sources’ could be used when determining the likelihood of a page being spam

Following on from the point above about siteAuthority, we see multiple references to ‘trusted sources’ within the documentation in the context of links (anchors). Specifically, we see the following attributes:

- trustedDemoted

- trustedExamples

- trustedMatching

- trustedTarget

- trustedTotal

It seems likely that Google could be referencing the total number of links from trusted sources to a particular URL, a ‘true’ or ‘false’ value assigned to links that come from trusted sources and, interestingly, the number of links from trusted sources with anchor text that matches spam terms.

We don’t know for sure what counts as spam terms in the context of anchor text, but there’s a good chance this is ‘exact match’ anchor text that uses keywords in the way that many link builders spammed with in the pre-Penguin days.

Could this mean that, in the event that you’re able to earn a link from a trusted source that uses keyword-rich anchor text, that the fact the linking site is a trusted source prevents this from flagging as spammy? Even though the anchor text itself may be? We can’t be sure, but it seems probable.

It also seems probable, given the ‘spamProbability’ attribute, that Google is using a calculation based on the count of links from trusted sources against those that aren’t to determine the likelihood of a page being spam.

What does this mean? Again, that earning links from authoritative, trustworthy sites, such as those that typically come about as a result of digital PR activity, is a key activity that brands should be leveraging as part of their wider SEO strategy.

8. The indexing tier of a link impacts its value

Let’s have a quick recap on indexing tiers before we dive into this one.

Google’s link index can be divided into tiers. The most important, most regularly-accessed and regularly-updated content is stored in flash memory. Less important content is stored on solid state drives, while even lesser important or out-of-date content is stored on standard hard drives, as confirmed by Gary Illyes on Google’s Search Off The Record podcast.

The existence of a sourceType attribute records the quality of the source of a link, in correlation with the tier in which the content exists. In simple terms, this means that the higher the indexing tier of a page, the higher the value of the links we would expect to see come from it.

This sourceType metric marks anchors (links) as either TYPE_HIGH_QUALITY, TYPE_MEDIUM_QUALITY, OR TYPE_LOW_QUALITY, based on the indexing tier of the link. It then goes on to say that sourceType “can be used as an importance indicator of an anchor”, giving us clear indication that Google is using this information to determine the relative importance.

Another point to note here is the value that Google places on content freshness. The documentation explicitly states that “TYPE_FRESHDOCS […] is a special case and is considered the same type as TYPE_HIGH_QUALITY for the purpose of anchor importance”.

This further highlights the importance of consistently earning high-quality links, given that the freshness of links places the link source into the ‘high-quality’ grouping.

Consistently running digital PR activity to earn high-quality links on a regular basis just became even more important now that we can see how freshness is likely an important factor.

We also see ‘indexTier’ referenced within the ‘Anchors’ module:

Ultimately, this means that you want your links to be coming from fresh, high-quality content – which reinforces why we as digital PRs place such importance on obtaining links from high-ranking sites, particularly news outlets. These links genuinely are more valuable!

9. The authors of your content and the experts you use as spokespeople matter

You can’t go anywhere in the world of SEO these days without hearing about E-E-A-T, and of course, one key element of this is authorship.

Some SEOs have struggled with the concept of E-E-A-T over the years – it’s a quality signal, rather than a ranking factor, and it’s difficult to quantify if you’re doing a good job or not.

One thing we have learnt from this leak is that Google explicitly stores information on the authors associated with a document.

What’s more, there is also cross-referencing to check if the entity on a page is indeed the author of the document.

While Google states this is mainly developed for news articles and scientific journals, it’s safe to say that paying close attention to authors for blog content and expert quotes in the media, and keeping these consistent, is a good idea from a digital PR perspective.

10. Links from pages with traffic are likely more impactful than those without

We’ve long said that digital PR should be about more than just building links, and that the most effective strategies will earn links as a byproduct of creating, doing or sharing something that people want to link to.

And when you’re executing digital PR activity in a way that promotes your brand and its people first and foremost, and earns links as a result of doing this, these links are far more likely to result in you getting links on pages that actually get traffic.

Why?

Because when you leverage PR, the tactic is pretty much exclusively about pitching out to the press. And these type of sites typically get (lots of) traffic

And we can see from the leaked documentation that links on pages that get traffic likely matter more than those that don’t.

In addition to the sourceType and indexTier attributes we defined above, where we also see a totalClicks one in the ‘RepositoryWebrefDocumentMetadata’ module.

In Rand’s write up on SparkToro, his source claimed that Google uses the clicks to a page to determine which tier it is placed in.

If a page gets no clicks, it’s placed into the ‘low quality’ tier. On the other hand, high volumes of clicks would see it placed in the ‘high-quality’ tier.

Links from pages in the low quality tier don’t pass PageRank it is believed. Therefore, these links aren’t contributing to increased rankings, or the page’s Quality Score.

Again, digital PR as a tactic is set up to see you land links from pages that get clicks. Other link building tactics, in comparison, aren’t.

This could explain why links earned with digital PR typically have a much stronger impact on rankings than other tactics, where the links earned aren’t on pages that get significant clicks.

11. A link’s value depends on how much Google trusts the site’s homepage

Within the ‘AnchorsAnchorSource’ module, we see reference to an attribute called ‘homePageInfo.’

Being referenced within the anchor source module, we know this is in relation to the source of links, rather than the target. Therefore, we can assume that this could be used to determine the weight of a link, with these being classified as either being fully trusted, partially trusted or not trusted.

The way I’m interpreting this is that this attribute is filled for every source page, but is only given a ‘trust’ value if it’s the homepage. Other pages on the site, those tagged with ‘not_homepage,’ would then inherit the homepage’s trust value, or at least this is what would be used in a weighting calculation.

Again, this just goes to show the importance of earning links from trusted sites. And whilst we don’t know what sites fall into which trust value, it’s safe to assume that high-quality news sites class as fully trusted.

12. Pay attention to your content’s dates

No, this is not Tinder advice 101: pay attention to the dates on your published content!

And given that PRs are known for updating content on a regular basis to re-angle it every year, for example, this becomes an interesting observation.

We’ve learnt from this leak that Google’s storage of timestamps for content is comprehensive.

One key takeaway from the documentation as a whole is that Google gives considerable value to fresh, up-to-date content. To get an accurate measure of this, it records:

date

bylineDate – the date explicitly set on a page, that will be shown in snippets in search results.

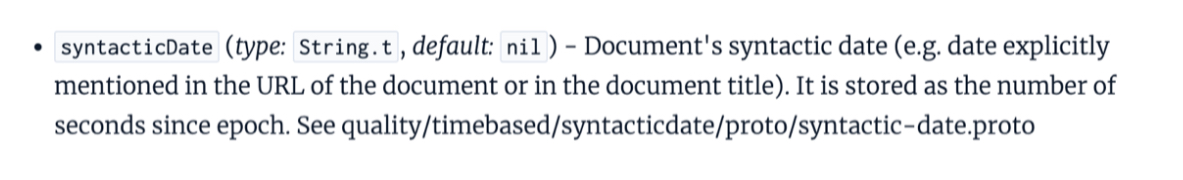

syntacticDate – the date explicitly mentioned in the URL or page title.

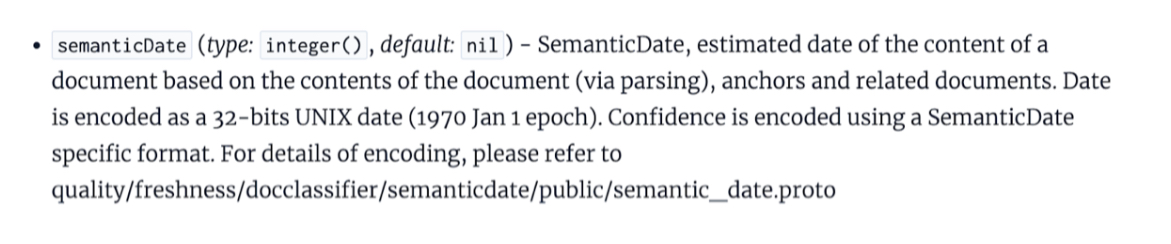

semanticDate – the estimated date of the content based on the contents of the document, links and related documents.

While there is no mention of any date-related demotions, keeping the dating of your content consistent across all of Google’s date measurements can only be best practice.

For example, if you publish a PR campaign on your site that updates annually, it’s important to keep those dating conventions consistent. Having a URL of /pr-index-2021, with a title including “2023” and a “last updated January 2024” could harm that page’s ability to rank and pass equity throughout your site, bottlenecking the effectiveness of your campaign. Instead, I’d steer clear from including dates in campaign URLs altogether, and update any dates within the content each time you refresh the content.

Liv’s takeaways for digital PRs in light of the leaked documents

While this leak is unlike anything we’ve seen in the industry for decades, I think it’s important to keep things in perspective and not jump to conclusions about what is or isn’t a ranking factor.

There are a lot of attributes referenced within the API documentation, but this is simply information that Google collects and stores data on, it’s not confirmation that they’re ranking factors.

That said, I think there is a lot of information here that directly translates into tangible action for both PRs and SEOs, and valuable confirmation of why we do what we do.

High-quality links from within fresh content on credible domains should always be prioritised, as well as contextual relevancy. The days of chasing huge volumes of links for the sake of it are well and truly behind us, and this documentation is the final nail in the coffin.

James’ takeaways for digital PRs in light of the leaked documents

There are lots of interesting takeaways within the leaked documentation, but for me, the standout takeaways are based around the need to have a clear strategy in terms of what to expect from your digital PR activity.

We can see clearer than ever that Google is likely rewarding relevant links and ignoring ones that aren’t. But there’s also evidence to suggest that links from sites in your own country are more valuable than those from overseas.

In a lot of cases, the documents contents make sense. After all, it shouldn’t be a surprise that relevant links from within your country should be rewarded more than those that aren’t relevant at all. Should it?

One thing that really stood out, though, is that links from pages with traffic are likely rewarded more than those from pages that get very little. In my opinion, this should be the case and should never have been questioned.

More than anything, though, this leak confirms what I’ve known for quite some time now; that digital PR is a tactic that can earn better links than pretty much any other link building tactic, and that it’s something that every brand should be investing into.